National e-currencies aim to centralize money again

In China, users can pay with e-CNY, the digital currency issued by China’s central bank.

The rise of cryptocurrencies is rewriting long-standing ideas about how money should work. Eager not to get left behind, central banks around the world are starting to develop their own digital currencies. These new forms of cash could boost financial inclusion, slash payment fees, and make money smarter, say experts, but they also present significant risks.

In most developed economies, the production and distribution of money has been the sole remit of central banks for at least a century. That was turned on its head in 2009 with the launch of Bitcoin, which uses blockchain technology to delegate the minting and governance of the digital currency to a decentralized network of volunteers.

Since then a host of new cryptocurrencies has emerged, promising a fast, cheap, and secure way to transfer money directly between users without relying on banks or payment providers. Second-generation cryptocurrencies like Ether also introduced the idea of programmable money, making it possible to create smart contracts that automate the execution of financial agreements.

Volatile prices and regulatory uncertainty have limited their adoption as a practical medium of payment, but the underlying technology has led to a major rethink of what money should look like in the digital age. “They proved that there can be a new way to organize money and make payments, and that this can be widely adopted,” says Andreas Veneris, a professor of computer engineering at the University of Toronto, who has advised the Bank of Canada on digital currencies.

Now countries around the world are borrowing from crypto’s playbook to develop digital versions of national currencies. China has long been the global leader in this field, starting research on digital currencies in 2014 and launching pilots of its digital yuan in four cities in late 2019.

But in October 2020, the Bahamas became the first country to roll out a central bank digital currency (CBDC) nationwide, and since then digital versions of the East Caribbean dollar, Nigeria’s naira, and the Jamaican dollar have all launched. An executive order from the Biden administration in March 2022 called for the U.S. Treasury Department to investigate the possibility of a digital dollar, and according to the Atlantic Council, more than 50 countries are in advanced stages of exploring CBDCs.

One of the primary motivations is to improve the cost, speed, and flexibility of digital payment, says Veneris. “Payment systems today are expensive; they are clumsy and slow,” he says, pointing out that much of the underlying technology is more than 40 years old. Much like cryptocurrencies, CBDCs could make it possible to instantly transfer money between people without relying on third parties, resulting in cheaper, faster payments.

They could also open up novel possibilities for revamping social welfare and financial inclusion, says Veneris, by enabling direct cash transfers to people regardless of whether they have a bank account. Smart-contract technology could also make it possible to ensure welfare money is spent only on permitted items, such as food or medicine.

China’s digital yuan is the largest-scale experiment in CBDCs to date. The project expanded to 23 cities by the end of last year. Total transactions had crossed 87.6 billion yuan with 261 million wallets opened, according to the People’s Bank of China (PBOC). Use accelerated in 2022 with total transaction volume in the first five months hitting 83 billion yuan. And it makes an interesting case study, says Rich Turrin, a Shanghai-based fintech consultant and author of Cashless: China’s Digital Currency Revolution, as other CBDCs will likely follow a similar blueprint. “Most of them are going to look pretty similar, because of the job they’re designed to do,” he says.

The digital yuan is the government’s response to an economy digitizing far faster than other developed countries, says Turrin. Fifty-two percent of Chinese retail transactions last year occurred online, compared to around 13 percent in the United States. The currency is aimed at fostering this digital economy, boosting financial inclusion among digital laggards, and providing a backup system in case the leading private payment providers WeChat and Alipay go down, says Turrin.

The coin itself is stored as a digital token on an electronic wallet—either one designed by the PBOC or the WeChat app. It can also be stored on specially designed payment cards that allow offline transfers for those without smartphones. To open a wallet, a user has to verify their identity with a bank, which maintains a record of who owns the wallet. For lower-value transactions, users can transfer directly to another person’s wallet by simply connecting their phones, and these transactions are effectively anonymous, says Turrin. The digital tokens encode a cryptographically protected record of transactions in much the same way as cryptocurrencies do to ensure transfers can’t be faked.

For transactions above roughly US $300, the wallet has to connect to a centralized government system that carries out fraud and anti-money-laundering checks and stores a record of the transaction. If the government suspects foul play, it can apply for a warrant to get banks to reveal the identity of those involved in transactions.

This has led to accusations that the digital yuan is designed to be an economic surveillance tool, something critics fear could be replicated elsewhere. But Turrin says that China’s recently enacted Personal Information Protection Law provides some of the strictest privacy protections in the world. Officials, for one, can’t access wallet holders’ details without going through the courts. Turrin also points out that both the Chinese government and most foreign ones can already obtain warrants to access citizens’ conventional financial records. “We have to be very careful in not ascribing superpowers to CBDCs, when government has these powers over your existing bank accounts,” he says.

Fears have been raised that CBDCs like the digital yuan could destabilize the private banking system by encouraging people to transfer their deposits into safer, central-bank-backed wallets. The design of the digital yuan makes this unlikely, though, says Turrin, because it can’t earn interest and there is a limit to how much people can transfer, which are design choices that similar projects will likely mimic.

But this raises questions over whether central banks are straying outside their remit when it comes to CBDCs, says Dante Disparte, chief strategy officer at Circle, the company behind the USDC stablecoin, whose value is pegged to the dollar. The primary role of a central bank is to ensure sustainable economic growth by controlling the supply of money, and making low-level decisions about how citizens use their cash is well outside its core competencies, he says.

“If you're setting micro-level, digital-wallet-level retail payments policy, then you stopped being a central bank and you started becoming a high street bank,” says Disparte. “The air gap between the central bank, the banking system, your wallet, and your money is a feature, not a bug.”

Public-sector organizations don’t have a great record when it comes to digital transformation, says Disparte, so expecting them to make the right calls on the future of digital payments seems unwise. A centralized CBDC creates considerable cybersecurity and obsolescence risks, he adds, and if it becomes the dominant standard for electronic money, people will have few alternatives if it fails.

He believes the government’s role should be to set guardrails on private-sector innovation rather than trying to manage the transition to digital cash from the top down. “The government should not be in the business of competing with the free market; it should be in the business of creating boundaries for rules-based innovation,” he says. “[CBDCs] would be the equivalent of the aviation-safety authorities choosing to fly planes and build jet engines.”

And no matter how robust protections around CBDCs are, Disparte says, a fully traceable digital currency may prove too tempting a tool for the authorities. “Who wants to take the societal bet that the aggregation of all of that retail economic activity isn’t one day used for ill?” he says.

Veneris questions whether people should be any happier entrusting their financial history to the private sector, given high-profile cases of big tech firms exploiting user data for nefarious purposes. But he agrees that a CBDC does have the potential to give governments unprecedented control over citizens.

One of the beauties of the cryptographic building blocks that underpin digital currencies, though, is the ability to encode rules into the way they work that can be easily audited and verified. This programmability opens the prospect of writing financial regulations directly into digital currencies both to protect citizens and prevent illicit behavior, in a way that enables direct public oversight.

“I can prove to you that the code is correct, I can prove to you that the code represents what I told you it represents, cryptographically,” he says. “If you introduce all these cryptographic principles, the CBDCs are going to always be slightly slower, but it will be more fair.”

Edd Gent is a freelance science and technology writer based in Bangalore, India. His writing focuses on emerging technologies across computing, engineering, energy and bioscience. He's on Twitter at @EddytheGent and email at edd dot gent at outlook dot com. His PGP fingerprint is ABB8 6BB3 3E69 C4A7 EC91 611B 5C12 193D 5DFC C01B. His public key is here. DM for Signal info.

If technologists can’t perfect it, quantum computers will never be big

Dates chiseled into an ancient tombstone have more in common with the data in your phone or laptop than you may realize. They both involve conventional, classical information, carried by hardware that is relatively immune to errors. The situation inside a quantum computer is far different: The information itself has its own idiosyncratic properties, and compared with standard digital microelectronics, state-of-the-art quantum-computer hardware is more than a billion trillion times as likely to suffer a fault. This tremendous susceptibility to errors is the single biggest problem holding back quantum computing from realizing its great promise.

Fortunately, an approach known as quantum error correction (QEC) can remedy this problem, at least in principle. A mature body of theory built up over the past quarter century now provides a solid theoretical foundation, and experimentalists have demonstrated dozens of proof-of-principle examples of QEC. But these experiments still have not reached the level of quality and sophistication needed to reduce the overall error rate in a system.

The two of us, along with many other researchers involved in quantum computing, are trying to move definitively beyond these preliminary demos of QEC so that it can be employed to build useful, large-scale quantum computers. But before describing how we think such error correction can be made practical, we need to first review what makes a quantum computer tick.

Information is physical. This was the mantra of the distinguished IBM researcher Rolf Landauer. Abstract though it may seem, information always involves a physical representation, and the physics matters.

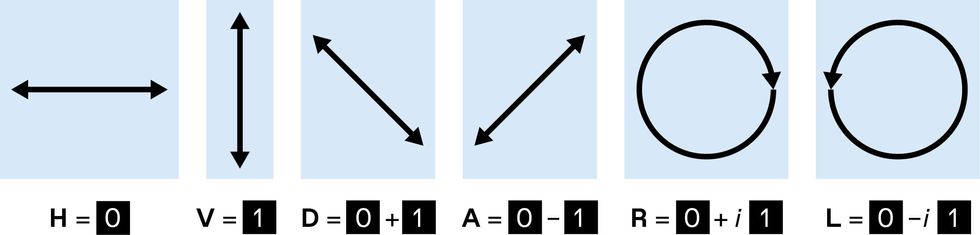

Conventional digital information consists of bits, zeros and ones, which can be represented by classical states of matter, that is, states well described by classical physics. Quantum information, by contrast, involves qubits—quantum bits—whose properties follow the peculiar rules of quantum mechanics.

A classical bit has only two possible values: 0 or 1. A qubit, however, can occupy a superposition of these two information states, taking on characteristics of both. Polarized light provides intuitive examples of superpositions. You could use horizontally polarized light to represent 0 and vertically polarized light to represent 1, but light can also be polarized on an angle and then has both horizontal and vertical components at once. Indeed, one way to represent a qubit is by the polarization of a single photon of light.

These ideas generalize to groups of n bits or qubits: n bits can represent any one of 2^n possible values at any moment, while n qubits can include components corresponding to all 2^n classical states simultaneously in superposition. These superpositions provide a vast range of possible states for a quantum computer to work with, albeit with limitations on how they can be manipulated and accessed. Superposition of information is a central resource used in quantum processing and, along with other quantum rules, enables powerful new ways to compute.

Researchers are experimenting with many different physical systems to hold and process quantum information, including light, trapped atoms and ions, and solid-state devices based on semiconductors or superconductors. For the purpose of realizing qubits, all these systems follow the same underlying mathematical rules of quantum physics, and all of them are highly sensitive to environmental fluctuations that introduce errors. By contrast, the transistors that handle classical information in modern digital electronics can reliably perform a billion operations per second for decades with a vanishingly small chance of a hardware fault.

Of particular concern is the fact that qubit states can roam over a continuous range of superpositions. Polarized light again provides a good analogy: The angle of linear polarization can take any value from 0 to 180 degrees.

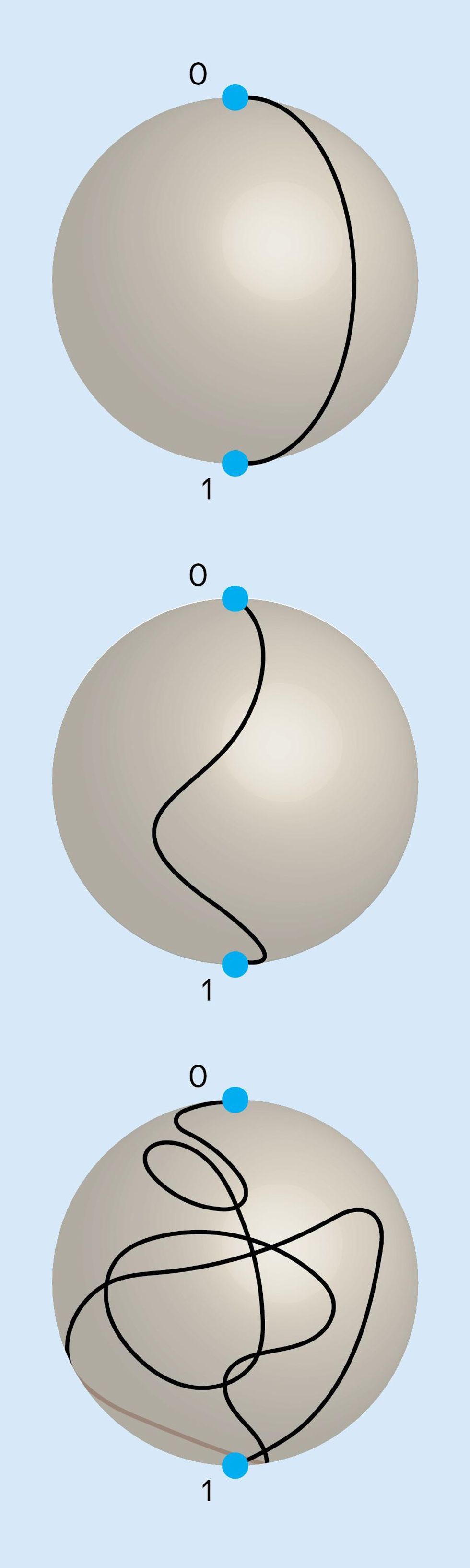

Pictorially, a qubit’s state can be thought of as an arrow pointing to a location on the surface of a sphere. On this sphere, known as a Bloch sphere, the north and south poles represent the binary states 0 and 1, respectively, and all other locations on the surface represent possible quantum superpositions of those two states. Noise causes the Bloch arrow to drift around the sphere over time. A conventional computer represents 0 and 1 with physical quantities, such as capacitor voltages, that can be locked near the correct values to suppress this kind of continuous wandering and unwanted bit flips. There is no comparable way to lock the qubit’s “arrow” to its correct location on the Bloch sphere.

Early in the 1990s, Landauer and others argued that this difficulty presented a fundamental obstacle to building useful quantum computers. The issue is known as scalability: Although a simple quantum processor performing a few operations on a handful of qubits might be possible, could you scale up the technology to systems that could run lengthy computations on large arrays of qubits? A type of classical computation called analog computing also uses continuous quantities and is suitable for some tasks, but the problem of continuous errors prevents the complexity of such systems from being scaled up. Continuous errors with qubits seemed to doom quantum computers to the same fate.

We now know better. Theoreticians have successfully adapted the theory of error correction for classical digital data to quantum settings. QEC makes scalable quantum processing possible in a way that is impossible for analog computers. To get a sense of how it works, it’s worthwhile to review how error correction is performed in classical settings.

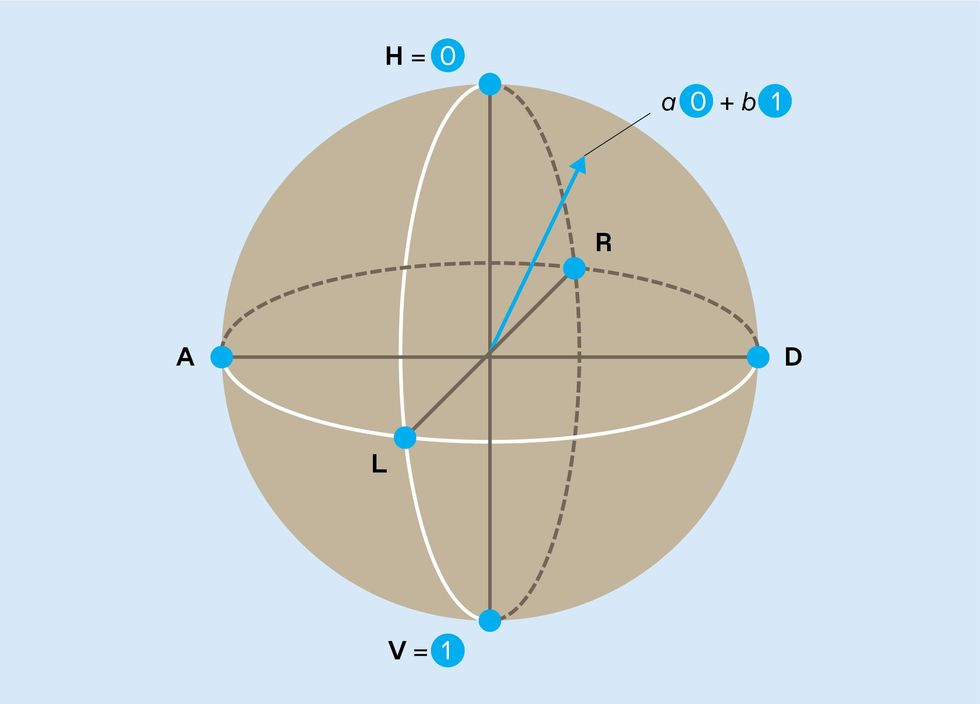

Simple schemes can deal with errors in classical information. For instance, in the 19th century, ships routinely carried clocks for determining the ship’s longitude during voyages. A good clock that could keep track of the time in Greenwich, in combination with the sun’s position in the sky, provided the necessary data. A mistimed clock could lead to dangerous navigational errors, though, so ships often carried at least three of them. Two clocks reading different times could detect when one was at fault, but three were needed to identify which timepiece was faulty and correct it through a majority vote.

The use of multiple clocks is an example of a repetition code: Information is redundantly encoded in multiple physical devices such that a disturbance in one can be identified and corrected.
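
The three-clock scheme can be sketched in a few lines of Python. This is only an illustration of a classical repetition code with majority-vote decoding; the function names `encode` and `majority_decode` are hypothetical and chosen for this example.

```python
from collections import Counter

def encode(bit, copies=3):
    """Store one bit redundantly in several physical copies."""
    return [bit] * copies

def majority_decode(codeword):
    """Recover the logical bit by majority vote over the copies."""
    return Counter(codeword).most_common(1)[0][0]

# One disturbed copy is outvoted by the other two.
codeword = encode(1)
codeword[0] ^= 1
recovered = majority_decode(codeword)

# Two disturbed copies outvote the truth, so decoding fails.
two_faults = encode(1)
two_faults[0] ^= 1
two_faults[2] ^= 1
failed = majority_decode(two_faults)
```

With an odd number of copies the vote can never tie, which is one reason three clocks are more useful than two.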

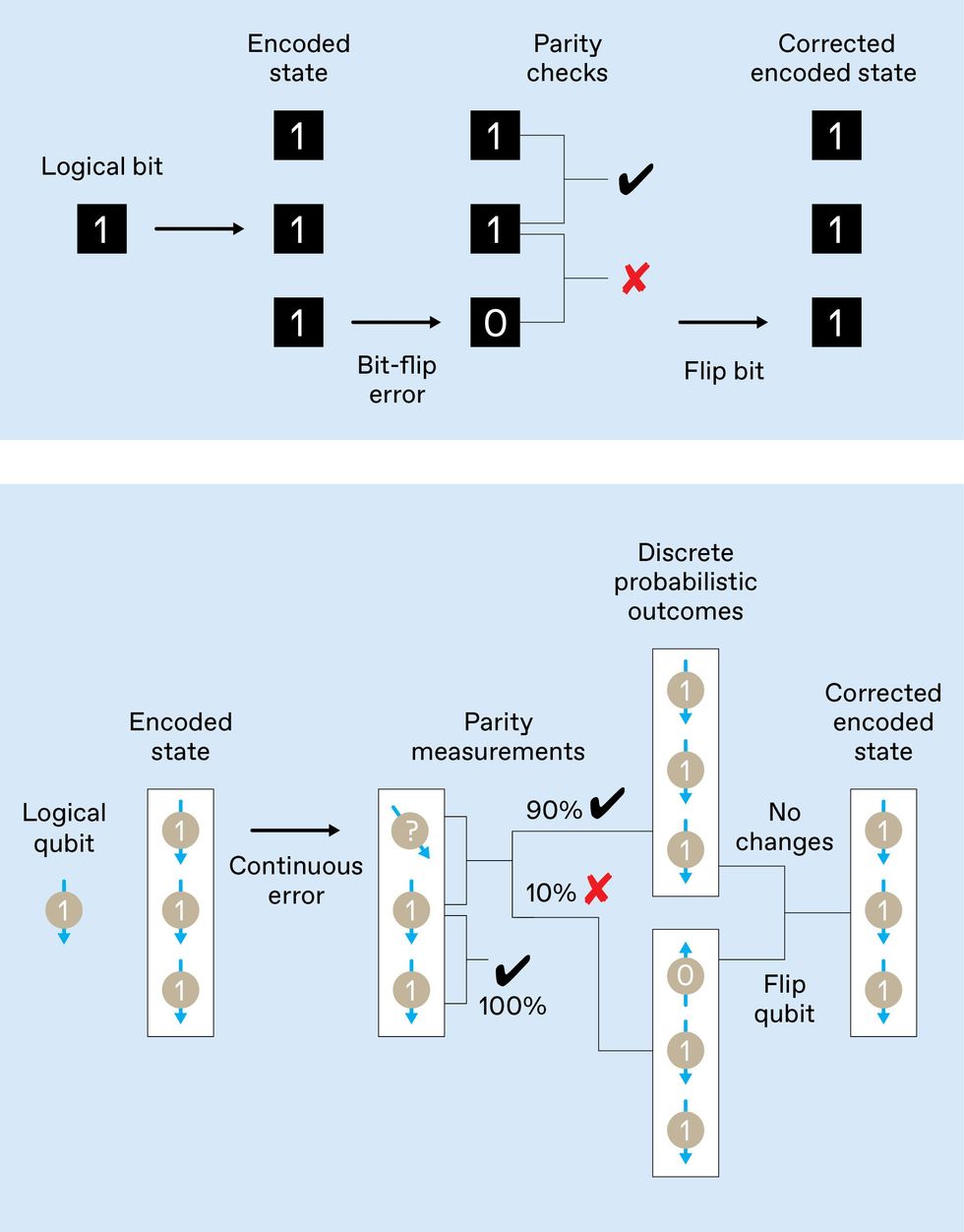

As you might expect, quantum mechanics adds some major complications when dealing with errors. Two problems in particular might seem to dash any hopes of using a quantum repetition code. The first problem is that measurements fundamentally disturb quantum systems. So if you encoded information on three qubits, for instance, observing them directly to check for errors would ruin them. Like Schrödinger’s cat when its box is opened, their quantum states would be irrevocably changed, spoiling the very quantum features your computer was intended to exploit.

The second issue is a fundamental result in quantum mechanics called the no-cloning theorem, which tells us it is impossible to make a perfect copy of an unknown quantum state. If you know the exact superposition state of your qubit, there is no problem producing any number of other qubits in the same state. But once a computation is running and you no longer know what state a qubit has evolved to, you cannot manufacture faithful copies of that qubit except by duplicating the entire process up to that point.

Fortunately, you can sidestep both of these obstacles. We’ll first describe how to evade the measurement problem using the example of a classical three-bit repetition code. You don’t actually need to know the state of every individual code bit to identify which one, if any, has flipped. Instead, you ask two questions: “Are bits 1 and 2 the same?” and “Are bits 2 and 3 the same?” These are called parity-check questions because two identical bits are said to have even parity, and two unequal bits have odd parity.

The two answers to those questions identify which single bit has flipped, and you can then counterflip that bit to correct the error. You can do all this without ever determining what value each code bit holds. A similar strategy works to correct errors in a quantum system.
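
The two parity-check questions can be written out as a small lookup, shown here as a classical sketch. The names `syndrome`, `SYNDROME_TO_FLIP`, and `correct` are illustrative assumptions. A classical program has to read the bits to compute the parities; the point of the sketch is that the correction step depends only on the two parity answers, never on the encoded value.

```python
def syndrome(bits):
    """Answer the two parity-check questions for a three-bit block.

    Returns (s12, s23), where 0 means "same" (even parity)
    and 1 means "different" (odd parity).
    """
    b1, b2, b3 = bits
    return (b1 ^ b2, b2 ^ b3)

# Which bit (0-based index) each pair of answers points to.
# None means no single-bit flip was detected.
SYNDROME_TO_FLIP = {
    (0, 0): None,
    (1, 0): 0,  # bit 1 disagrees with bits 2 and 3
    (1, 1): 1,  # bit 2 disagrees with both neighbors
    (0, 1): 2,  # bit 3 disagrees with bits 1 and 2
}

def correct(bits):
    """Counterflip the bit identified by the syndrome, if any."""
    fixed = list(bits)
    flip = SYNDROME_TO_FLIP[syndrome(bits)]
    if flip is not None:
        fixed[flip] ^= 1
    return fixed

# The same syndrome arises whether the encoded value is 0 or 1.
same_answers = syndrome([1, 0, 0]) == syndrome([0, 1, 1])
```

Because `[1, 0, 0]` and `[0, 1, 1]` give identical answers, the parity checks locate the flipped bit while saying nothing about which value the block encodes.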

Learning the values of the parity checks still requires quantum measurement, but importantly, it does not reveal the underlying quantum information. Additional qubits can be used as disposable resources to obtain the parity values without revealing (and thus without disturbing) the encoded information itself.


What about no-cloning? It turns out it is possible to take a qubit whose state is unknown and encode that hidden state in a superposition across multiple qubits in a way that does not clone the original information. This process allows you to record what amounts to a single logical qubit of information across three physical qubits, and you can perform parity checks and corrective steps to protect the logical qubit against noise.

Quantum errors consist of more than just bit-flip errors, though, making this simple three-qubit repetition code unsuitable for protecting against all possible quantum errors. True QEC requires something more. That came in the mid-1990s when Peter Shor (then at AT&T Bell Laboratories, in Murray Hill, N.J.) described an elegant scheme to encode one logical qubit into nine physical qubits by embedding a repetition code inside another code. Shor’s scheme protects against an arbitrary quantum error on any one of the physical qubits.

Since then, the QEC community has developed many improved encoding schemes, which use fewer physical qubits per logical qubit—the most compact use five—or enjoy other performance enhancements. Today, the workhorse of large-scale proposals for error correction in quantum computers is called the surface code, developed in the late 1990s by borrowing exotic mathematics from topology and high-energy physics.

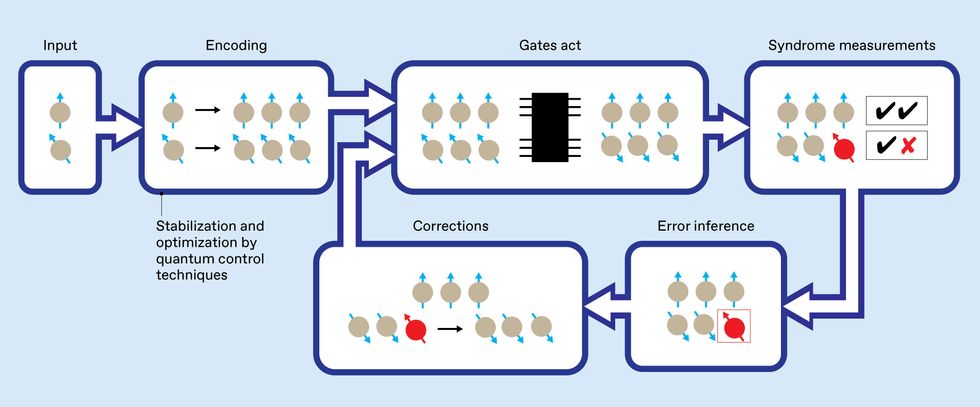

It is convenient to think of a quantum computer as being made up of logical qubits and logical gates that sit atop an underlying foundation of physical devices. These physical devices are subject to noise, which creates physical errors that accumulate over time. Periodically, generalized parity measurements (called syndrome measurements) identify the physical errors, and corrections remove them before they cause damage at the logical level.

A quantum computation with QEC then consists of cycles of gates acting on qubits, syndrome measurements, error inference, and corrections. In terms more familiar to engineers, QEC is a form of feedback stabilization that uses indirect measurements to gain just the information needed to correct errors.

QEC is not foolproof, of course. The three-bit repetition code, for example, fails if more than one bit has been flipped. What’s more, the resources and mechanisms that create the encoded quantum states and perform the syndrome measurements are themselves prone to errors. How, then, can a quantum computer perform QEC when all these processes are themselves faulty?

Remarkably, the error-correction cycle can be designed to tolerate errors and faults that occur at every stage, whether in the physical qubits, the physical gates, or even in the very measurements used to infer the existence of errors! Called a fault-tolerant architecture, such a design permits, in principle, error-robust quantum processing even when all the component parts are unreliable.

Even in a fault-tolerant architecture, the additional complexity introduces new avenues for failure. The effect of errors is therefore reduced at the logical level only if the underlying physical error rate is not too high. The maximum physical error rate that a specific fault-tolerant architecture can reliably handle is known as its break-even error threshold. If error rates are lower than this threshold, the QEC process tends to suppress errors over the entire cycle. But if error rates exceed the threshold, the added machinery just makes things worse overall.
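
A toy calculation shows why a threshold exists at all. The sketch below assumes a classical repetition code in which each copy flips independently with probability p and the majority vote itself is perfect; these are simplifying assumptions, and the function name `logical_error_rate` is hypothetical. In this idealized model the crossover sits at p = 0.5. Realistic fault-tolerant quantum architectures have far lower thresholds because the checks and corrections are themselves faulty.

```python
from math import comb

def logical_error_rate(p, copies):
    """Probability that a majority of the copies flip, defeating the vote."""
    smallest_majority = copies // 2 + 1
    return sum(
        comb(copies, k) * p**k * (1 - p) ** (copies - k)
        for k in range(smallest_majority, copies + 1)
    )

# Below the toy threshold, more redundancy suppresses errors.
below = [logical_error_rate(0.01, n) for n in (1, 3, 5)]

# Above it, more redundancy makes the outcome worse.
above = [logical_error_rate(0.6, n) for n in (1, 3, 5)]
```

For three copies the sum reduces to 3p²(1 − p) + p³, which is smaller than p only when p is below one half.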

The theory of fault-tolerant QEC is foundational to every effort to build useful quantum computers because it paves the way to building systems of any size. If QEC is implemented effectively on hardware exceeding certain performance requirements, the effect of errors can be reduced to arbitrarily low levels, enabling the execution of arbitrarily long computations.

At this point, you may be wondering how QEC has evaded the problem of continuous errors, which is fatal for scaling up analog computers. The answer lies in the nature of quantum measurements.

In a typical quantum measurement of a superposition, only a few discrete outcomes are possible, and the physical state changes to match the result that the measurement finds. With the parity-check measurements, this change helps.

Imagine you have a code block of three physical qubits, and one of these qubit states has wandered a little from its ideal state. If you perform a parity measurement, just two results are possible: Most often, the measurement will report the parity state that corresponds to no error, and after the measurement, all three qubits will be in the correct state, whatever it is. Occasionally the measurement will instead indicate the odd parity state, which means an errant qubit is now fully flipped. If so, you can flip that qubit back to restore the desired encoded logical state.

In other words, performing QEC transforms small, continuous errors into infrequent but discrete errors, similar to the errors that arise in digital computers.
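
This digitization of errors can be checked numerically on a three-qubit block. The sketch below is a minimal state-vector simulation in plain Python under stated assumptions: the logical state is a|000> + b|111>, the error is a small rotation of the first qubit about the X axis, and the parity measurements are ideal. All function names and the chosen amplitudes and angle are illustrative.

```python
import math

N = 3  # physical qubits; basis index bits read q1 q2 q3, most significant first

def bit(index, qubit):
    return (index >> (N - 1 - qubit)) & 1

def encode(a, b):
    """Logical state a|000> + b|111> as a list of 8 amplitudes."""
    state = [0j] * 2**N
    state[0b000] = complex(a)
    state[0b111] = complex(b)
    return state

def rotate_x(state, qubit, theta):
    """Apply cos(theta/2) I - i sin(theta/2) X to one qubit."""
    mask = 1 << (N - 1 - qubit)
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [c * state[i] - 1j * s * state[i ^ mask] for i in range(len(state))]

def syndrome_of(index):
    """Parity of qubits 1 and 2, and of qubits 2 and 3, for a basis state."""
    return (bit(index, 0) ^ bit(index, 1), bit(index, 1) ^ bit(index, 2))

def measure_probabilities(state):
    """Probability of each possible pair of parity-check outcomes."""
    probs = {}
    for i, amp in enumerate(state):
        key = syndrome_of(i)
        probs[key] = probs.get(key, 0.0) + abs(amp) ** 2
    return probs

def project(state, syndrome):
    """State after the parity checks report the given outcome."""
    kept = [amp if syndrome_of(i) == syndrome else 0j for i, amp in enumerate(state)]
    norm = math.sqrt(sum(abs(x) ** 2 for x in kept))
    return [x / norm for x in kept]

def flip(state, qubit):
    """Apply a full bit flip (X gate) to one qubit."""
    mask = 1 << (N - 1 - qubit)
    return [state[i ^ mask] for i in range(len(state))]

def overlap(u, v):
    """Squared overlap; 1.0 means the states match up to a global phase."""
    return abs(sum(x.conjugate() * y for x, y in zip(u, v))) ** 2

ideal = encode(0.6, 0.8)
drifted = rotate_x(ideal, qubit=0, theta=0.2)    # small continuous error
probs = measure_probabilities(drifted)
no_error = project(drifted, (0, 0))               # usual outcome
repaired = flip(project(drifted, (1, 0)), qubit=0)  # rare outcome, then counterflip
```

In this model the "no error" outcome occurs with probability cos²(θ/2) and the "first qubit flipped" outcome with probability sin²(θ/2). In both cases the block ends up in the ideal encoded state, either directly or after one counterflip, so the small drift never survives as a small drift.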

Researchers have now demonstrated many of the principles of QEC in the laboratory—from the basics of the repetition code through to complex encodings, logical operations on code words, and repeated cycles of measurement and correction. Current estimates of the break-even threshold for quantum hardware place it at about 1 error in 1,000 operations. This level of performance hasn’t yet been achieved across all the constituent parts of a QEC scheme, but researchers are getting ever closer, achieving multiqubit logic with rates of fewer than about 5 errors per 1,000 operations. Even so, passing that critical milestone will be the beginning of the story, not the end.

On a system with a physical error rate just below the threshold, QEC would require enormous redundancy to push the logical rate down very far. It becomes much less challenging with a physical rate further below the threshold. So just crossing the error threshold is not sufficient—we need to beat it by a wide margin. How can that be done?
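
The cost of operating close to the threshold can be made concrete with a rough estimate. The sketch below assumes a commonly quoted heuristic for surface-code-style architectures, in which the logical error rate scales as (p / p_th) raised to the power (d + 1) / 2 for code distance d. The heuristic, the prefactor of 0.1, the target rate, and the function names are assumptions for illustration; only the threshold of about 1 error in 1,000 operations comes from the text.

```python
def estimated_logical_rate(p, distance, p_threshold=1e-3, prefactor=0.1):
    """Heuristic logical error rate for a given physical rate and code distance."""
    return prefactor * (p / p_threshold) ** ((distance + 1) / 2)

def distance_needed(p, target, p_threshold=1e-3, prefactor=0.1):
    """Smallest odd code distance whose estimated logical rate meets the target."""
    if p >= p_threshold:
        raise ValueError("at or above threshold, adding redundancy does not help")
    d = 3
    while estimated_logical_rate(p, d, p_threshold, prefactor) > target:
        d += 2
    return d

target = 1e-12
just_below = distance_needed(9e-4, target)  # physical rate 10% under threshold
well_below = distance_needed(1e-4, target)  # physical rate 10x under threshold
```

Under these assumptions the distance required just below the threshold is more than ten times larger than the distance required at a tenth of the threshold. Since the number of physical qubits in a surface-code patch grows roughly with the square of the distance, the gap in hardware overhead is larger still.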

If we take a step back, we can see that the challenge of dealing with errors in quantum computers is one of stabilizing a dynamic system against external disturbances. Although the mathematical rules differ for the quantum system, this is a familiar problem in the discipline of control engineering. And just as control theory can help engineers build robots capable of righting themselves when they stumble, quantum-control engineering can suggest the best ways to implement abstract QEC codes on real physical hardware. Quantum control can minimize the effects of noise and make QEC practical.

In essence, quantum control involves optimizing how you implement all the physical processes used in QEC—from individual logic operations to the way measurements are performed. For example, in a system based on superconducting qubits, a qubit is flipped by irradiating it with a microwave pulse. One approach uses a simple type of pulse to move the qubit’s state from one pole of the Bloch sphere, along the Greenwich meridian, to precisely the other pole. Errors arise if the pulse is distorted by noise. It turns out that a more complicated pulse, one that takes the qubit on a well-chosen meandering route from pole to pole, can result in less error in the qubit’s final state under the same noise conditions, even when the new pulse is imperfectly implemented.

One facet of quantum-control engineering involves careful analysis and design of the best pulses for such tasks in a particular imperfect instance of a given system. It is a form of open-loop (measurement-free) control, which complements the closed-loop feedback control used in QEC.

This kind of open-loop control can also change the statistics of the physical-layer errors to better comport with the assumptions of QEC. For example, QEC performance is limited by the worst-case error within a logical block, and individual devices can vary a lot. Reducing that variability is very beneficial. In an experiment our team performed using IBM’s publicly accessible machines, we showed that careful pulse optimization reduced the difference between the best-case and worst-case error in a small group of qubits by more than a factor of 10.

Some error processes arise only while carrying out complex algorithms. For instance, crosstalk errors occur on qubits only when their neighbors are being manipulated. Our team has shown that embedding quantum-control techniques into an algorithm can improve its overall success by orders of magnitude. This technique makes QEC protocols much more likely to correctly identify an error in a physical qubit.

For 25 years, QEC researchers have largely focused on mathematical strategies for encoding qubits and efficiently detecting errors in the encoded sets. Only recently have investigators begun to address the thorny question of how best to implement the full QEC feedback loop in real hardware. And while many areas of QEC technology are ripe for improvement, there is also growing awareness in the community that radical new approaches might be possible by marrying QEC and control theory. One way or another, this approach will turn quantum computing into a reality—and you can carve that in stone.

This article appears in the July 2022 print issue as “Quantum Error Correction at the Threshold.”
